Master's projects and master's theses in the spring and fall semesters 2025

In the spring and fall semesters of 2025, students are currently working on the following topics as part of their master's projects and theses:

- Pipelines for interpretable machine learning in psychological research

- Evaluation of significance tests for Shapley values

Fall semester 2024 / Luana Brunelli: Reliability measures in intensive longitudinal data -- Understanding generalizability and multilevel theory with interactive tools

In psychological research, the use of intensive longitudinal data (ILD) is becoming increasingly common to capture within-person dynamics over time. However, this growing interest in ILD highlights the need for reliable measurement across repeated time points. If reliability is low, conclusions drawn from ILD can be misleading or unstable.

This project explores advanced approaches to estimating reliability in ILD settings, drawing on both Generalizability Theory and Multilevel Modeling. These frameworks take the nested structure of ILD into account (observations nested within individuals) and therefore provide more accurate estimates of measurement consistency over time than approaches that ignore this nesting.
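To make the generalizability-theory idea concrete, here is a minimal Python sketch of two coefficients that are widely used for ILD, following the formulation of Cranford et al. (2006): R_KF, the between-person reliability of scores averaged over items and occasions, and R_C, the reliability of within-person change. It assumes that the variance components have already been estimated. The function names and example values are hypothetical and are not taken from the thesis.

```python
def reliability_between(var_p: float, var_pi: float, var_e: float,
                        n_items: int, n_occasions: int) -> float:
    """R_KF: reliability of person means over n_items items and n_occasions fixed occasions.

    var_p  -- between-person variance
    var_pi -- person x item interaction variance
    var_e  -- residual variance (person x occasion x item, confounded with error)
    """
    signal = var_p + var_pi / n_items
    noise = var_e / (n_items * n_occasions)
    return signal / (signal + noise)


def reliability_change(var_pt: float, var_e: float, n_items: int) -> float:
    """R_C: reliability of within-person change across occasions for an n_items scale.

    var_pt -- person x occasion interaction variance (systematic within-person change)
    var_e  -- residual variance
    """
    return var_pt / (var_pt + var_e / n_items)


# Hypothetical variance components, only for illustration
print(reliability_between(var_p=1.0, var_pi=0.2, var_e=1.0, n_items=4, n_occasions=10))
print(reliability_change(var_pt=0.5, var_e=1.0, n_items=4))
```

In this formulation both coefficients increase with the number of items, but only R_KF also benefits from additional measurement occasions, which is one reason why design choices matter when judging reliability in ILD.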

Through a comprehensive simulation study, we examined how key design factors, such as the number of participants, the number of measurement occasions, and the amount of measurement error, influence the stability and accuracy of different reliability estimates. We also investigated how person-by-item interactions can systematically affect the interpretation of reliability across methods.
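A simulation of this kind can be sketched in a few lines of Python with NumPy. The sketch assumes a simple, hypothetical generating model for a fully crossed persons x occasions x items design (person, person x occasion, and person x item effects plus normal error). It estimates the variance components with the classical ANOVA expected-mean-squares method and repeats this over replications to show how the spread of the person x occasion estimate depends on the number of participants. This is not the simulation design of the thesis, and all parameter values are illustrative.

```python
import numpy as np


def simulate_ild(n_persons, n_occasions, n_items, var_p, var_pt, var_pi, var_e, rng):
    """Simulate y[p, t, i] = person + person x occasion + person x item + error (hypothetical model)."""
    person = rng.normal(0.0, np.sqrt(var_p), size=(n_persons, 1, 1))
    person_occ = rng.normal(0.0, np.sqrt(var_pt), size=(n_persons, n_occasions, 1))
    person_item = rng.normal(0.0, np.sqrt(var_pi), size=(n_persons, 1, n_items))
    error = rng.normal(0.0, np.sqrt(var_e), size=(n_persons, n_occasions, n_items))
    return person + person_occ + person_item + error


def anova_components(y):
    """ANOVA (expected mean squares) estimates for a crossed p x t x i design, one score per cell."""
    n_p, n_t, n_i = y.shape
    grand = y.mean()
    m_p, m_t, m_i = y.mean(axis=(1, 2)), y.mean(axis=(0, 2)), y.mean(axis=(0, 1))
    m_pt, m_pi, m_ti = y.mean(axis=2), y.mean(axis=1), y.mean(axis=0)

    # Three-way interaction residual; with one score per cell it is confounded with error
    resid = (y - m_pt[:, :, None] - m_pi[:, None, :] - m_ti[None, :, :]
             + m_p[:, None, None] + m_t[None, :, None] + m_i[None, None, :] - grand)
    ms_e = (resid ** 2).sum() / ((n_p - 1) * (n_t - 1) * (n_i - 1))

    # Mean squares of the person-related effects
    pt_eff = m_pt - m_p[:, None] - m_t[None, :] + grand
    ms_pt = n_i * (pt_eff ** 2).sum() / ((n_p - 1) * (n_t - 1))
    pi_eff = m_pi - m_p[:, None] - m_i[None, :] + grand
    ms_pi = n_t * (pi_eff ** 2).sum() / ((n_p - 1) * (n_i - 1))
    ms_p = n_t * n_i * ((m_p - grand) ** 2).sum() / (n_p - 1)

    # Solve the expected-mean-squares equations (estimates can be negative in small samples)
    return {
        "e": ms_e,
        "pt": (ms_pt - ms_e) / n_i,
        "pi": (ms_pi - ms_e) / n_t,
        "p": (ms_p - ms_pt - ms_pi + ms_e) / (n_t * n_i),
    }


def pt_estimate_summary(n_persons, n_occasions=10, n_items=4, n_reps=200, seed=1):
    """Mean and SD of the person x occasion variance estimate across simulated replications."""
    rng = np.random.default_rng(seed)
    estimates = [
        anova_components(simulate_ild(n_persons, n_occasions, n_items,
                                      var_p=1.0, var_pt=0.5, var_pi=0.2, var_e=1.0,
                                      rng=rng))["pt"]
        for _ in range(n_reps)
    ]
    return float(np.mean(estimates)), float(np.std(estimates))


for n in (20, 100):
    print(n, pt_estimate_summary(n))
```

With the true person x occasion variance set to 0.5, both settings should give estimates close to 0.5 on average, while the spread across replications is clearly larger with 20 participants. The same replication loop could be wrapped around reliability coefficients computed from these components to study their stability.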

To support applied researchers, we developed a Shiny application that lets users explore interactively how design parameters affect reliability. With this tool, researchers can simulate scenarios, visualize the outcomes, and make informed decisions about study design and reliability assessment. The app, which also illustrates the findings of the simulation study, is available at https://sdsunibas.shinyapps.io/luana/

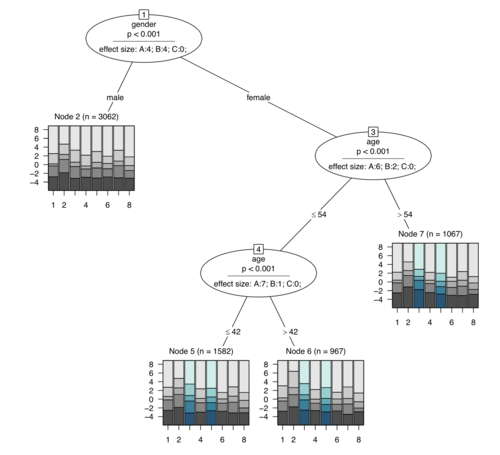

Spring semester 2024 / Jan Radek: Partial Credit trees meet the partial gamma coefficient

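In psychology, tests such as personality or language assessments are evaluated before they are released to the public. A common step in this evaluation is to check whether all items are fair across different groups of individuals. An item is not fair if persons from different groups who have the same level of the measured trait nevertheless differ in how likely they are to give a particular response. This is referred to as differential item functioning (DIF); for items with several ordered response categories, such differences can also be confined to individual steps between categories, which is referred to as differential step functioning (DSF).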
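The difference between DIF and DSF can be illustrated with the partial credit model, a standard item response theory model for items with ordered categories. In the minimal NumPy sketch below, the category probabilities of a four-category item are computed for hypothetical groups with the same latent trait value theta. Shifting only one threshold (step) for the focal group is an instance of DSF, whereas shifting all thresholds by the same amount changes the overall item difficulty, that is, DIF at the item level. The threshold values are invented for illustration.

```python
import numpy as np


def pcm_probabilities(theta, thresholds):
    """Category probabilities P(X = 0, ..., m | theta) under the partial credit model.

    P(X = x) is proportional to exp(sum_{k=1..x} (theta - delta_k));
    category 0 corresponds to an empty sum, i.e. exp(0).
    """
    deltas = np.asarray(thresholds, dtype=float)
    logits = np.concatenate(([0.0], np.cumsum(theta - deltas)))
    logits -= logits.max()  # subtract the maximum for numerical stability
    weights = np.exp(logits)
    return weights / weights.sum()


theta = 0.5
reference = pcm_probabilities(theta, [-1.0, 0.0, 1.0])
focal_dsf = pcm_probabilities(theta, [-1.0, 0.0, 1.8])   # only the third step is harder
focal_dif = pcm_probabilities(theta, [-0.5, 0.5, 1.5])   # every step shifted by +0.5

for label, probs in [("reference", reference), ("DSF", focal_dsf), ("DIF", focal_dif)]:
    expected_score = float(np.dot(np.arange(len(probs)), probs))
    print(label, np.round(probs, 3), round(expected_score, 3))
```

In the DSF case, the relative probabilities of the three lower categories are the same as in the reference group and only the last step differs; in the DIF case, the whole response distribution shifts toward lower categories.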

In this project, we focus on Partial Credit Trees (PCtree), a method that combines the partial credit measurement model from item response theory with decision trees from machine learning. We extended PCtrees by incorporating an effect size measure for DIF/DSF in polytomous items, namely the partial gamma coefficient from psychometrics. Researchers can now see not only which items are affected by DIF/DSF but also judge how large, and thus how meaningful, these effects are.
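The partial gamma coefficient is a stratified version of Goodman and Kruskal's gamma: pairs of persons are formed only within strata (typically persons with the same total or rest score), concordant (C) and discordant (D) pairs of group membership and item response are counted and pooled over strata, and gamma = (C - D) / (C + D). The plain-Python sketch below assumes a numerically coded group variable and an arbitrary stratifying score; the data are hypothetical, and how the thesis chose the strata and linked the coefficient to the tree splits is not shown here.

```python
from collections import defaultdict
from itertools import combinations


def partial_gamma(groups, responses, strata):
    """Partial gamma between a numerically coded group variable and an ordinal item response.

    Pairs are formed only among persons in the same stratum; concordant and
    discordant pairs are summed over all strata before computing gamma.
    """
    by_stratum = defaultdict(list)
    for group, response, stratum in zip(groups, responses, strata):
        by_stratum[stratum].append((group, response))

    concordant = discordant = 0
    for persons in by_stratum.values():
        for (g1, y1), (g2, y2) in combinations(persons, 2):
            product = (g1 - g2) * (y1 - y2)
            if product > 0:
                concordant += 1
            elif product < 0:
                discordant += 1
            # product == 0: tie on group or response, ignored by gamma

    if concordant + discordant == 0:
        return float("nan")  # undefined when every pair is tied
    return (concordant - discordant) / (concordant + discordant)


# Hypothetical data: a binary group variable, item responses 0-2,
# and a stratifying score with two levels.
groups = [0, 0, 1, 1, 0, 1, 0, 1]
responses = [0, 1, 1, 2, 0, 0, 1, 1]
strata = ["low", "low", "low", "low", "high", "high", "high", "high"]
print(partial_gamma(groups, responses, strata))  # 4 concordant, 1 discordant pair -> 0.6
```

Values near 0 indicate that, within strata, group membership is unrelated to the item response, while values near -1 or 1 indicate that one group systematically gives higher responses.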

During his internship, Jan showed in a series of simulation studies that the partial gamma coefficient helps researchers to evaluate whether splits in the tree are meaningful, to identify DIF and DSF items, and to prevent unnecessary tree growth when effect sizes are negligible. We also addressed the need to correct for item-wise testing, which matters especially in longer assessments, and illustrated the new method using data from the LISS panel.
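When every item is tested separately, the number of tests grows with the length of the assessment, and so does the chance of flagging items by accident. The text does not say which correction the project used; as one standard option, here is a minimal plain-Python sketch of the Holm-Bonferroni step-down adjustment, applied to hypothetical item-wise p-values.

```python
def holm_adjust(p_values):
    """Holm-Bonferroni adjusted p-values, returned in the original item order."""
    m = len(p_values)
    order = sorted(range(m), key=lambda k: p_values[k])  # indices from smallest to largest p
    adjusted = [0.0] * m
    running_max = 0.0
    for rank, idx in enumerate(order):
        # Multiply by the number of hypotheses not yet rejected, cap at 1,
        # and enforce monotonicity of the adjusted values.
        candidate = min(1.0, (m - rank) * p_values[idx])
        running_max = max(running_max, candidate)
        adjusted[idx] = running_max
    return adjusted


item_p_values = [0.01, 0.04, 0.03, 0.005]  # hypothetical p-values, one per item
adjusted = holm_adjust(item_p_values)
flagged = [item for item, p in enumerate(adjusted) if p < 0.05]
print(adjusted, flagged)
```

Compared with a plain Bonferroni correction (multiplying every p-value by the number of items), Holm's procedure controls the same family-wise error rate but is uniformly more powerful.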